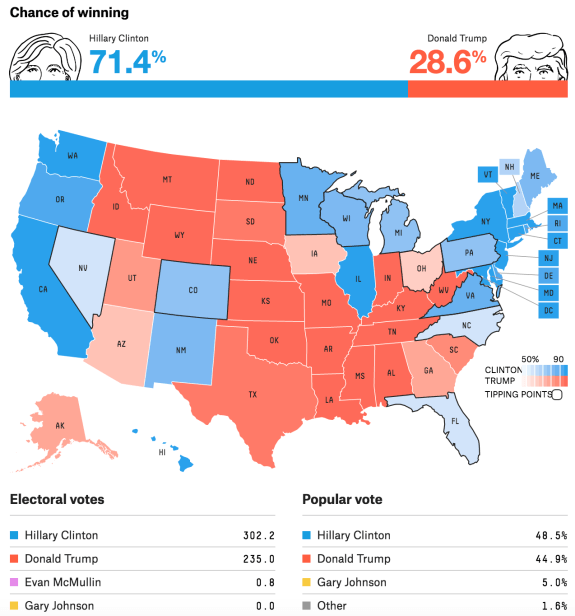

Many avid media observers were shocked by Donald Trump’s victory in the 2016 United States presidential election. For weeks, media outlets had reported on polls predicting a decisive Trump defeat. On election day, FiveThirtyEight, a data journalism website, conservatively projected that there was a 71 per cent chance of Hillary Clinton winning. Even more confidently, Reuters, an international news agency, predicted a whopping 90 per cent chance of a Clinton victory.

Despite this confidence, the predictions were wrong. To understand how the polls could be so inaccurate, it’s important to understand how polls are conducted.

“[Polling is] probability sampling, that means that the polling company […] will choose a random sample that is supposed to be statistically representative of the population as a whole,” explained Barry Eidlin, a McGill assistant professor of sociology who specializes in political processes. “[The sample size] can be as small as 1,000 [people], but they’re usually around the 3,000 range. Then, with that randomly generated sample, [… the polling company] will ask a series of standardized questions on a survey. Then, they use those to make estimations about the opinions of the populations as a whole.”
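To give a sense of how much precision those sample sizes buy, here is a minimal Python sketch of the textbook margin-of-error formula. It assumes a perfectly random sample, a 95 per cent confidence level, and an evenly split electorate; none of these assumptions come from Eidlin or from any polling company, and real polls apply weighting that this ignores. The function name `margin_of_error` is made up for illustration.

```python
import math

def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Approximate margin of error for a simple random sample.

    Assumptions (ours, for illustration): simple random sampling,
    z = 1.96 for a 95 per cent confidence level, and proportion = 0.5,
    which is the worst case for the width of the margin.
    """
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)

# The two sample sizes mentioned in the quote above.
for n in (1000, 3000):
    points = margin_of_error(n) * 100
    print(f"n = {n}: about +/- {points:.1f} percentage points")
```

Under these assumptions, a 1,000-person sample carries a margin of roughly three percentage points, and tripling the sample narrows it to just under two. The formula only captures random sampling error, not problems such as low response rates or respondents who misreport their intentions.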

While many media outlets, such as Reuters, conducted their own polls, FiveThirtyEight aggregated results from multiple state and national polls from across the United States. FiveThirtyEight then ran its polling analysis through its election simulator to test for a variety of outcomes.
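The general idea of averaging polls and then simulating many possible outcomes can be sketched in a few lines of Python. This is a toy illustration only, not FiveThirtyEight’s actual model: the poll leads are invented numbers, the three-point polling error is an arbitrary assumption, and the function name `win_probability` is hypothetical.

```python
import random
import statistics

def win_probability(poll_leads, polling_error_sd=3.0, runs=10_000, seed=0):
    """Estimate a candidate's chance of winning from a list of poll leads.

    poll_leads: the candidate's lead in each poll, in percentage points.
    polling_error_sd: assumed spread of overall polling error (an
        illustrative guess, not a published figure).
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    average_lead = statistics.mean(poll_leads)
    wins = 0
    for _ in range(runs):
        # Each run imagines the true result differing from the poll
        # average by a random, normally distributed error.
        simulated_lead = rng.gauss(average_lead, polling_error_sd)
        if simulated_lead > 0:
            wins += 1
    return wins / runs

# Invented leads for illustration; these are not real 2016 polls.
hypothetical_leads = [4.0, 2.0, 3.0, 1.0]
print(f"Estimated chance of winning: {win_probability(hypothetical_leads):.0%}")
```

Even in this simplified version, a candidate who leads the polling average still loses in a meaningful share of the simulated runs, which is why a forecast well above 50 per cent is not a guarantee.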

Polling companies use different methodologies to generate randomized samples. The Pew Research Center conducts its polls by selecting a randomized group of landline and cellphone numbers in the 50 states and the District of Columbia. On the other hand, an NBC News/SurveyMonkey poll relies on a sample of the 3 million people who use the SurveyMonkey online polling system. These two methods of polling face different challenges and can yield different results.

“These days, the problem is [that …] lots of people don’t have landlines anymore,” Eidlin said. “There’s a bit of crisis in polling strictly at a logistical level in that response rates have collapsed so much, right at the moment when we have a lot of very sophisticated statistical techniques to make sure that our samples are good and the estimations [the polling companies] are using are good.”

While logistical and technological difficulties could have been sources of error, another possible explanation for the polls’ failure is what is known as the “Shy Trumper” hypothesis.

“There’s nothing conclusive, but clearly all the polls severely underestimated the level of Trump support,” Eidlin said. “One of the ways in which that could’ve happened is by a lot of people who actually voted for Trump saying they were undecided [….] There’s always a problem in any kind of survey you are doing with what is called ‘appropriateness bias,’ […] in that if you hold opinions that you know are not socially acceptable, you’re not that likely to tell a stranger on the phone your unpopular opinions.”

A final hypothesis for why the polls got it so wrong is simply that there were many last-minute swing voters whom the pollsters never recorded. Whichever hypothesis is correct, one fact remains: The predictions of the pollsters and the aggregators were wrong across the board.

“Pollsters are well aware that the profession faces serious challenges that this election has only served to highlight,” Pew Research Center wrote in a post-election article. “At its best, polling provides an equal voice to everyone and helps to give expression to the public’s needs and wants in ways that elections may be too blunt to do. That is why restoring polling’s credibility is so important, and why we are committed to helping in the effort to do so.”