Imagine a world where every sound makes you want to move. Why is it that some sounds, like the rhythm of a song, spark an irresistible urge to dance while others, like everyday conversation, leave us still and focused?

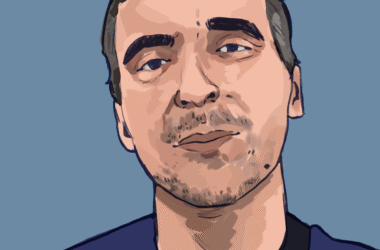

Benjamin Morillon, who completed his postdoc at the Montreal Neurological Institute-Hospital (The Neuro), tackled this puzzle in a recent lecture at the Feindel Brain and Mind Seminar Series, describing how our brains distinguish between music and language and how those differences shape our responses to each.

On Oct. 1, Morillon presented his work on the neural dynamics underlying music perception and speech comprehension. His talk, “Neural Dynamics and Computations Constraining Music and Speech Processing,” explored how the brain handles these two kinds of auditory experience.

Morillon’s talk began with a question many of us might not have considered before: Why do we instinctively dance to music?

“Why do humans dance? It’s completely weird,” Morillon said. “You have a vibration in the ear that you perceive thanks to your sensory systems, and then you spontaneously move yourself. It’s completely ridiculous.”

Central to his research is the idea that the brain’s auditory and motor systems work together to anticipate the next beat in a song. This process, known as “predictive timing,” is what makes us want to move to music.

“Dance is the expression of an oscillatory entrainment. Your body is entrained to the sound of music and is anticipating the next beat within the audio-motor loop,” Morillon explained in his talk.

This entrainment happens within the dorsal auditory pathway, a route connecting the auditory cortex with parietal and motor areas that helps locate sounds and links hearing to movement. While auditory regions track the rhythm of a song, the motor system uses this information to predict when the next beat will occur, producing the urge to move in time with it. This coupling works best for rhythms of around 2Hz, or two beats per second, a rate that matches natural movements such as walking or nodding.
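For a sense of scale, a 2Hz rhythm works out to one beat every half second, or 120 beats per minute:

$$
T = \frac{1}{f} = \frac{1}{2\,\text{Hz}} = 0.5\,\text{s}, \qquad 2\,\tfrac{\text{beats}}{\text{s}} \times 60\,\tfrac{\text{s}}{\text{min}} = 120\,\text{beats per minute}
$$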

“The motor system is actually quite simple; you can do stuff at 2Hz, and you can anticipate things in time at 2Hz. That’s quite limited for cognitive function that was evolutionarily selected for its flexibility,” Morillon remarked.

The motor system is also most engaged when music has a moderate level of rhythmic complexity, such as syncopation, in which notes fall off the expected beat. If a song is too simple, we lose interest; if it is overly complex, it becomes difficult to synchronize our movements. This “sweet spot” of syncopation produces a “groove”: the sense of wanting to move to the music.

After discussing music, Morillon turned to another fundamental human behaviour: speech comprehension. Like music, speech engages specific brain regions along the auditory pathway. Understanding speech requires the brain to adapt to its acoustic features, especially under challenging conditions, such as when speech is time-compressed to play faster or masked by background noise.

Morillon’s findings also touch on the concept of “channel capacity,” a term borrowed from information theory for the maximum rate at which a system can reliably transmit information. Here, it describes the limit on how much the brain can extract from the different acoustic and linguistic features of speech at once.

Morillon demonstrated that different features of speech, including syllabic rate and pitch, affect comprehension to different degrees, with syllabic rate among the most influential.

Using intracranial recordings in humans, Morillon’s team found that the auditory cortex processes syllables and phonemes on different time scales, allowing speech signals to be processed in parallel. While the brain decodes the slower syllabic rhythm of speech, it simultaneously processes finer, faster phonemic details, a remarkable example of neural efficiency.

Morillon’s research sheds light on how our brains juggle the rhythms of music and speech, offering a glimpse into how these neural processes shape everyday experience, whether we’re moving to the beat of a song or holding a conversation.